Parallel Hyper-Parameter Optimization in Python

Tuning the specific hyper-parameters used in many machine learning algorithms is as much of an art as it is a science.

Thankfully, a few tools can help us do it effectively. One of them is grid search: we create a "grid" of possible hyper-parameter values, test every combination of values via k-fold cross-validation, and choose the "best" combination based on a user-defined metric such as accuracy, area under the ROC curve, or sensitivity.

This process is very computationally expensive, especially as the number of hyper-parameters increases. We can significantly reduce the time a grid search takes by running it in parallel if we have a multi-core CPU or a CPU that supports hyper-threading. The idea of parallel computing is sometimes intimidating even to veteran programmers. Thankfully, the work of parallel scaling can be done automatically through scikit-learn's GridSearchCV class.

Writing Code

We will use the "digits" dataset and DecisionTreeClassifier from scikit-learn in this example:

Output: 77.9% Accuracy
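A minimal sketch of such a baseline might look like the following. It assumes the accuracy is the mean over a 3-fold cross-validation with default hyper-parameters; the evaluation method, the variable names, and the fixed `random_state` are assumptions, so the exact number will differ from run to run and from the figure quoted above.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Load the handwritten digits dataset as a feature matrix and label vector.
X, y = load_digits(return_X_y=True)

# Default hyper-parameters; random_state is fixed only for repeatability (assumption).
clf = DecisionTreeClassifier(random_state=0)

# Assumption: accuracy is estimated as the mean over 3 cross-validation folds.
scores = cross_val_score(clf, X, y, cv=3, scoring="accuracy")
baseline_accuracy = scores.mean()

print(f"{baseline_accuracy:.1%} Accuracy")
```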

Not bad, but let's see if tweaking some parameters has any effect.

scikit-learn's DecisionTreeClassifier has quite a few hyper-parameters that can be tweaked. Let's start by looking at two of them and some possible values:

Criterion

Description: The function to measure the quality of a split

Possible Values: "gini", "entropy"

Max Depth

Description: The maximum depth of the tree. If None, then nodes are expanded until all leaves are pure or until all leaves contain fewer than min_samples_split samples.

Possible Values: None or an integer (we will consider 2, 4, 6, 8 and 10)

(other parameters listed here)

We will create a "parameter grid" as a dictionary of possible values for these hyper-parameters:
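A sketch of such a grid, using the values listed above, could be written as follows. The variable name `param_grid` is an assumption; `ParameterGrid` is used here only to count the combinations.

```python
from sklearn.model_selection import ParameterGrid

# Keys are DecisionTreeClassifier argument names; values are the candidates to try.
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [None, 2, 4, 6, 8, 10],
}

# 2 criteria x 6 depths = 12 combinations.
n_combinations = len(ParameterGrid(param_grid))
print(n_combinations)  # 12
```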

Next we will pass the parameter grid to our GridSearchCV function that will automatically run the classifier with each possible combination of parameters:
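One way this step might look is sketched below. It assumes 3-fold cross-validation scored by accuracy, and it assumes the "+/-" figure is two standard deviations of the fold scores; both are guesses about the original setup, and `random_state` is fixed only for repeatability.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_digits(return_X_y=True)

param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [None, 2, 4, 6, 8, 10],
}

# cv=3 and scoring="accuracy" are assumptions about the original setup.
grid_search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    cv=3,
    scoring="accuracy",
)
grid_search.fit(X, y)

# cv_results_ holds one entry per parameter combination.
results = grid_search.cv_results_
for mean, std, params in zip(
    results["mean_test_score"], results["std_test_score"], results["params"]
):
    # Assumption: the spread is reported as two standard deviations.
    print(f"{mean:.3f} (+/-{2 * std:.3f}) for {params}")
```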

Output:

0.772 (+/-0.061) for {'criterion': 'gini', 'max_depth': None}

0.312 (+/-0.027) for {'criterion': 'gini', 'max_depth': 2}

0.546 (+/-0.075) for {'criterion': 'gini', 'max_depth': 4}

0.706 (+/-0.089) for {'criterion': 'gini', 'max_depth': 6}

0.763 (+/-0.104) for {'criterion': 'gini', 'max_depth': 8}

0.778 (+/-0.080) for {'criterion': 'gini', 'max_depth': 10}

0.785 (+/-0.035) for {'criterion': 'entropy', 'max_depth': None}

0.354 (+/-0.012) for {'criterion': 'entropy', 'max_depth': 2}

0.625 (+/-0.050) for {'criterion': 'entropy', 'max_depth': 4}

0.763 (+/-0.033) for {'criterion': 'entropy', 'max_depth': 6}

0.787 (+/-0.043) for {'criterion': 'entropy', 'max_depth': 8}

0.798 (+/-0.036) for {'criterion': 'entropy', 'max_depth': 10}

As you can see, the highest accuracy (79.8%) occurs with criterion='entropy' and max_depth=10.

Let's increase the size of our parameter grid:
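A larger grid could look like the sketch below. Only the five parameter names and the 2x7x7x3x4 shape come from this article; the candidate values themselves, and which parameter gets which number of values, are hypothetical and chosen purely for illustration.

```python
from sklearn.model_selection import ParameterGrid

# Hypothetical candidate values; only the names and the overall shape are from the article.
large_param_grid = {
    "criterion": ["gini", "entropy"],            # 2 values
    "max_depth": [None, 2, 4, 6, 8, 10, 12],     # 7 values
    "min_samples_split": [2, 3, 4, 5, 6, 7, 8],  # 7 values
    "max_features": [None, "sqrt", "log2"],      # 3 values
    "min_samples_leaf": [1, 2, 3, 4],            # 4 values
}

# Number of combinations, and cross-validation fits under 3-fold CV.
n_combinations = len(ParameterGrid(large_param_grid))
n_fits = n_combinations * 3
print(n_combinations, n_fits)  # 1176 3528
```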

Problems

Now we could run this as is and it would work; however, there are two problems with running a grid of this size.

Output size:

This is a 2x7x7x3x4 grid, which results in 1,176 combinations.

Rather than read the entire output, we will use the "best_score_" and "best_params_" attributes of the fitted grid search:
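As a rough illustration, reading those two attributes might look like this. The small grid, `cv=3`, and the variable names are assumptions made so the sketch runs quickly.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_digits(return_X_y=True)

# A deliberately small, hypothetical grid to keep the example fast.
small_grid = {"criterion": ["gini", "entropy"], "max_depth": [4, 8]}

search = GridSearchCV(DecisionTreeClassifier(random_state=0), small_grid, cv=3)
search.fit(X, y)

# best_score_ is the mean cross-validated score of the best combination;
# best_params_ is the dictionary of parameter values that produced it.
print(f"Best Score: {search.best_score_:.1%}")
print("Best Parameters:", search.best_params_)
```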

Speed:

Running a full 3-fold cross-validation on each of the 1,176 combinations means fitting the classifier 3,528 times! This can get seriously slow, so we will set the "n_jobs" parameter to -1, which allows the grid search to use every available core in parallel:
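A sketch of the parallel run is shown below. To keep it quick it uses a reduced, hypothetical grid rather than the full 1,176 combinations; the grid values, `cv=3`, and the timing code are assumptions, and the only change that matters for parallelism is `n_jobs=-1`.

```python
import time

from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = load_digits(return_X_y=True)

# Reduced hypothetical grid: 2 x 6 x 4 = 48 combinations.
grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [None, 2, 4, 6, 8, 10],
    "min_samples_leaf": [1, 2, 3, 4],
}

# n_jobs=-1 asks the search to use all available CPU cores.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0), grid, cv=3, n_jobs=-1
)

start = time.perf_counter()
search.fit(X, y)
elapsed = time.perf_counter() - start

# Cross-validation fits = combinations x folds (the final refit is not counted).
n_fits = len(search.cv_results_["params"]) * search.n_splits_
print(f"{n_fits} cross-validation fits in {elapsed:.1f}s")
print(f"Best Score: {search.best_score_:.1%}")
print("Best Parameters:", search.best_params_)
```

Passing `n_jobs=1` instead runs the same search on a single core, which makes it easy to compare timings on your own machine.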

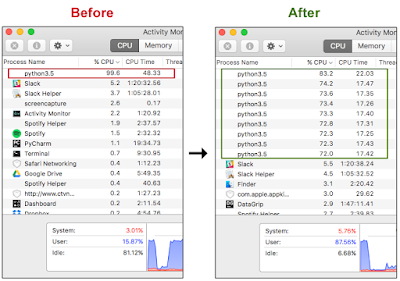

A quick look at Activity Monitor confirms that the script is running on all available cores:

Running on a quad-core i7 with hyper-threading enabled

Final Output:

It is important to note that we only analyzed a relatively small group of hyper-parameters here; typically you should spend a significant amount of time tuning your grid to find a truly optimal configuration. Nevertheless, we achieved the following results:

Best Score:

80.1% Accuracy (+2.2%)

Best Parameters:

'min_samples_leaf': 1,

'max_depth': 8,

'max_features': None,

'criterion': 'entropy',

'min_samples_split': 2
